- JSON stands for JavaScript Object Notation. JSON is a lightweight format for storing and transporting data. JSON is often used when data is sent from a server to a web page. JSON is 'self-describing' and easy to understand.

Overview

- Serverless ETL (extract, transform, load) service

- Uses Spark underneath

Glue Crawler

- This is not a crawler in the sense of pulling data out of data sources

- A crawler reads data from data sources ONLY TO determine their data structure / schema

- Crawls databases using a connection (actually a connection profile)

- Crawls files on S3 without needing a connection

- After each crawl, virtual tables are created in the Data Catalog. These tables store metadata such as the columns, data types and location of the data, but not the data itself

- The crawler is serverless: you pay by Data Processing Unit (time consumed for crawling), but there is a 10-minute minimum duration for each crawl

- You can create tables directly without using crawlers

Crawling Behaviors

- For database tables, it is easy to determine the data types of columns, but the crawler mostly shines on file-based data (i.e. a data lake)

- The crawler determines columns and data types by reading data from the files and “guessing” the format based on patterns and on how similar the format is across different files

- It creates tables based on the prefix / folder name of similarly-formatted files, and creates partitions of tables as needed

- It uses “classifiers” to parse and understand data; classifiers are sets of pattern matchers

- Users may create custom classifiers

- Classifiers are categorized based on file types, e.g. the Grok classifier is for text-based files

- There are JSON and CSV classifiers for their respective file types

- Classifiers only classify values into primitive data types; for example, even if a JSON file contains an ISO 8601 formatted timestamp, the crawler will still see it as a string
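To make the primitive-type behavior concrete, here is a minimal plain-Python sketch of that kind of inference. It is not Glue's classifier implementation: the function names `infer_primitive_type` and `infer_schema`, the sample records and the type labels are all assumptions chosen for illustration.

```python
import json

def infer_primitive_type(value):
    """Map a parsed JSON value to an illustrative primitive type name."""
    # bool must be tested before int because bool is a subclass of int in Python
    if isinstance(value, bool):
        return "boolean"
    if isinstance(value, int):
        return "int"
    if isinstance(value, float):
        return "double"
    if isinstance(value, str):
        return "string"  # ISO 8601 timestamps end up here as well
    if isinstance(value, list):
        return "array"
    if isinstance(value, dict):
        return "struct"
    return "null"

def infer_schema(json_lines):
    """Collect the set of type names seen for each top-level key."""
    schema = {}
    for line in json_lines:
        record = json.loads(line)
        for key, value in record.items():
            schema.setdefault(key, set()).add(infer_primitive_type(value))
    return schema

# Hypothetical sample data: two JSON lines with an ISO 8601 timestamp field
sample = [
    '{"id": 1, "price": 9.5, "created_at": "2020-01-31T12:00:00Z"}',
    '{"id": 2, "price": 12.0, "created_at": "2020-02-01T08:30:00Z"}',
]
schema = infer_schema(sample)
print(schema)
```

In this sketch `created_at` is reported as a string, which is why a later type conversion step is needed before the column can be used as a timestamp.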

Glue Data Catalog

- An index to the location, schema and runtime metrics of your data

- Connections

- This is actually connection configuration for Glue to connect to databases

- If you access S3 via a VPCE, then you also need a NETWORK type connection

- Creating a NETWORK type connection without a VPCE will cause a “Cannot create enum from NETWORK value!” error, which is very confusing

- If you access S3 via its public endpoints then no connection is required

Glue ETL

- Glue ETL is EMR made serverless

- It runs Spark underneath

- Users can create Spark jobs and run them directly without provisioning servers

- Glue ETL provisions EMR clusters on-the-fly, so expect 5-10 minutes cold start time even for the simplest jobs

- Glue has built-in helpers to perform common tasks like casting string to timestamp (you will need this for crawled JSONs)

- ETL is useful for

- Type conversion for columns

- Compressing data from plain formats (text, log, CSV, JSON) into a columnar format (Parquet)

- Data joining, so data can be scanned more easily

- Other data transforming and manipulation

- ETL Jobs can be connected to form a Workflow; every Job in the Workflow can be triggered manually or automatically
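The string-to-timestamp conversion mentioned above can be illustrated without a Glue or Spark environment. The following is a plain-Python stand-in using only the standard library, not Glue's own helper; `cast_string_to_timestamp`, `convert_column` and the sample record are hypothetical names and data.

```python
from datetime import datetime, timezone

def cast_string_to_timestamp(value):
    """Parse an ISO 8601 string into a timezone-aware datetime."""
    # datetime.fromisoformat only accepts a trailing "Z" from Python 3.11 on,
    # so normalise it to an explicit UTC offset first.
    return datetime.fromisoformat(value.replace("Z", "+00:00"))

def convert_column(records, column):
    """Return new records with `column` converted from string to datetime."""
    return [{**r, column: cast_string_to_timestamp(r[column])} for r in records]

# Hypothetical crawled record whose timestamp is still typed as a string
crawled = [{"id": 1, "created_at": "2020-01-31T12:00:00Z"}]
converted = convert_column(crawled, "created_at")
print(converted[0]["created_at"].isoformat())
```

In a real job the same conversion would be expressed as a column mapping or cast inside the Spark script; the sketch only shows the before and after types.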

Dev Endpoint

- Glue ETL provisions EMR for every Job run, so it is too slow for development purposes

- If you are developing your Spark script and want an environment that is in the cloud and has access to your resources, use a Dev Endpoint

- Basically, Glue provisions a long-running (and long-charging) EMR cluster for you as a dev environment. Remember to delete the endpoint when you are done to avoid an unexpectedly large bill

This post is a reference of my examples for processing JSON data in SQL Server. For more detailed explanations of these functions, please see my post series on JSON in SQL Server 2016:

Additionally, the complete reference for SQL JSON handling can be found at MSDN: https://msdn.microsoft.com/en-us/library/dn921897.aspx

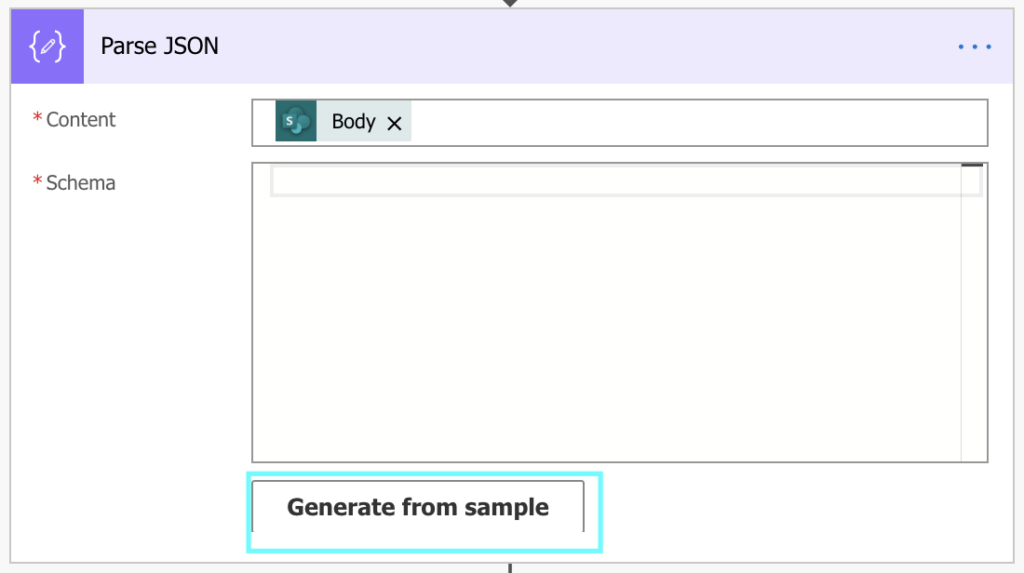

Parsing JSON

Getting string JSON data into a SQL readable form.

ISJSON()

Checks to see if the input string is valid JSON.

JSON_VALUE()

Extracts a specific scalar string value from a JSON string using JSON path expressions.

Strict vs. Lax mode

Determines whether the function returns NULL or raises an error when the JSON path cannot be found.
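In T-SQL the mode is chosen by prefixing the path expression with `lax` (the default) or `strict`. The difference in behavior can be sketched in plain Python as follows. This is a simplified stand-in, not SQL Server's implementation: the helper `json_value` and its `strict` flag are hypothetical, and it only handles dotted property paths.

```python
import json

def json_value(json_text, path, strict=False):
    """Look up a dotted path such as '$.user.name' in a JSON document."""
    current = json.loads(json_text)
    # "$.user.name" -> ["user", "name"]
    keys = [k for k in path.lstrip("$").split(".") if k]
    for key in keys:
        if not isinstance(current, dict) or key not in current:
            if strict:
                # strict mode: a missing path is an error
                raise KeyError(f"path not found: {path}")
            return None  # lax mode: a missing path yields NULL
        current = current[key]
    return current

doc = '{"user": {"name": "Ann"}}'
print(json_value(doc, "$.user.name"))  # existing path
print(json_value(doc, "$.user.age"))   # missing path, lax mode
try:
    json_value(doc, "$.user.age", strict=True)
except KeyError as err:
    print("strict mode error:", err)
```

Lax mode is convenient for sparse or inconsistent documents, while strict mode is the safer choice when a missing property indicates bad data.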

JSON_QUERY()

Returns a JSON fragment for the specified JSON path.

This is useful to help filter an array and then extract values with JSON_VALUE():

OPENJSON()

Returns a SQL result set for the specified JSON path. The result set includes columns identifying the datatypes of the parsed data.

Creating JSON

Creating JSON data from either strings or result sets.

FOR JSON AUTO

Automatically creates a JSON string from a SELECT statement. Quick and dirty.

FOR JSON PATH

Formats a SQL query into a JSON string, allowing the user to define structure and formatting.

Modifying JSON

Updating, adding to, and deleting from JSON data.

JSON_MODIFY()

Allows the user to update properties and values, add properties and values, and delete properties and values (the delete is unintuitive, see below).

Modify:

Add:

Delete property:

Delete from array (this is not intuitive, see my Microsoft Connect item to fix this: https://connect.microsoft.com/SQLServer/feedback/details/3120404/sql-modify-json-null-delete-is-not-consistent-between-properties-and-arrays )

SQL JSON Performance Tuning

SQL JSON functions are already fast. Adding computed columns and indexes makes them extremely fast.

Computed Column JSON Indexes

JSON indexes are simply regular indexes on computed columns.

Add a computed column:

Add an index to our computed column:

Performance test: